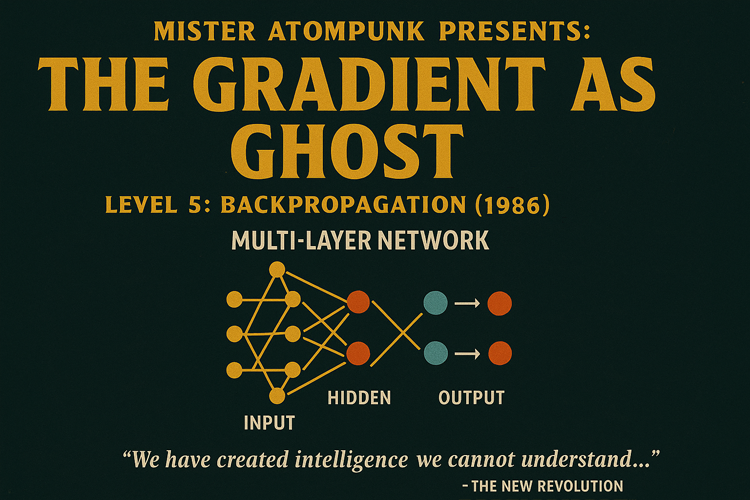

Mister Atompunk Presents: Atomic Almanac Season Zero - Level 5


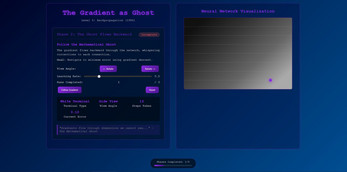

Level 5 — THE GRADIENT AS GHOST

Backpropagation (1986)

“Errors descend the network; gradients rise like a ghost.” — Rumelhart, Hinton & Williams (paraphrase)

What this is

A guided micro-lesson on the breakthrough that resurrected neural nets. Add a hidden layer, follow the gradient downhill, and watch a network finally solve XOR, the problem that stalled neural-network research for more than 15 years. Four short phases, one big idea: learning by sending credit (and blame) backward through the network.

Playthrough

Phase 1 — The Resurrection

Build a 3-layer network (input, hidden, output). See why adding a hidden layer changes which functions the network can represent.

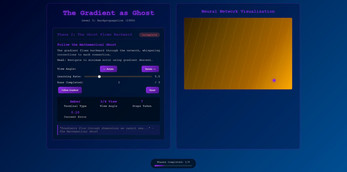
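Why the hidden layer matters can be checked directly. A single threshold unit would need b ≤ 0 to output 0 on (0,0), and w1 + b > 0 and w2 + b > 0 to output 1 on (1,0) and (0,1). Adding the last two gives w1 + w2 + b > −b ≥ 0, so it would wrongly fire on (1,1). The sketch below is a minimal illustration assuming step activations; the helper names `single_unit_can_fit` and `two_layer_xor` and the weight grid are hypothetical, not taken from the game. It searches a small grid for a single unit that computes XOR (finding none), then shows a hand-wired hidden layer (OR and NAND feeding an AND) that computes XOR exactly. The grid search only illustrates the argument; the inequality above is what proves it.

```python
import itertools

# Truth table for XOR: output 1 exactly when the two inputs differ.
XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

# Hypothetical search grid for weights and bias: -4.0 to 4.0 in steps of 0.5.
GRID = [i / 2 for i in range(-8, 9)]


def step(z):
    """Threshold activation: fire (1) when the weighted sum is positive."""
    return 1 if z > 0 else 0


def perceptron(w1, w2, b, x):
    """A single linear threshold unit with no hidden layer."""
    return step(w1 * x[0] + w2 * x[1] + b)


def single_unit_can_fit(grid):
    """Try every (w1, w2, b) on the grid; True if any reproduces XOR."""
    for w1, w2, b in itertools.product(grid, repeat=3):
        if all(perceptron(w1, w2, b, x) == t for x, t in XOR_TABLE.items()):
            return True
    return False


def two_layer_xor(x):
    """Hand-wired hidden layer: XOR(a, b) = AND(OR(a, b), NAND(a, b))."""
    h_or = step(x[0] + x[1] - 0.5)     # hidden unit 1 behaves like OR
    h_nand = step(-x[0] - x[1] + 1.5)  # hidden unit 2 behaves like NAND
    return step(h_or + h_nand - 1.5)   # output unit behaves like AND


print("single unit fits XOR on the grid:", single_unit_can_fit(GRID))
for x, t in XOR_TABLE.items():
    print(x, "target", t, "two-layer output", two_layer_xor(x))
```

The hidden units re-describe each input as "at least one on" and "not both on"; in that new space XOR is a simple AND, which one threshold unit can compute.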

Phase 2 — The Ghost Flows Backward

Ride the “error surface.” Adjust the learning rate and watch gradient descent steer the parameters toward a minimum.

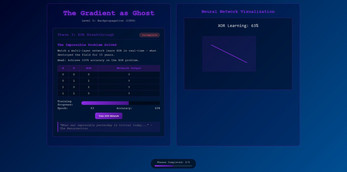
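The effect of the learning-rate slider can be reproduced on a toy surface. This is a minimal sketch assuming an elongated quadratic bowl E(w) = a·w1² + b·w2² with a = 1 and b = 10 (hypothetical values, not the game's actual surface), and plain gradient descent w ← w − lr·∇E(w) starting from (1, 1).

```python
def error(w1, w2, a=1.0, b=10.0):
    """Toy error surface E(w) = a*w1^2 + b*w2^2, with its minimum at the origin."""
    return a * w1 ** 2 + b * w2 ** 2


def descend(lr, steps=100, a=1.0, b=10.0, start=(1.0, 1.0)):
    """Plain gradient descent on the toy surface; returns the final error."""
    w1, w2 = start
    for _ in range(steps):
        g1, g2 = 2 * a * w1, 2 * b * w2  # gradient of E at the current point
        w1 -= lr * g1                    # step downhill along each coordinate
        w2 -= lr * g2
    return error(w1, w2, a, b)


# Each step multiplies w1 by (1 - 2*a*lr) and w2 by (1 - 2*b*lr), so descent
# converges only while both factors have magnitude below 1: lr < 1 / max(a, b) = 0.1.
for lr in (0.01, 0.09, 0.11):
    print(f"lr={lr:<5} final error={descend(lr):.3e}")
```

With lr = 0.01 the error falls but slowly along the shallow w1 direction; lr = 0.09 ends lowest even though w2 overshoots and flips sign every step (factor −0.8); lr = 0.11 crosses the threshold on the steep w2 axis (factor −1.2) and the error blows up. The steepest direction sets the ceiling on the learning rate.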

Phase 3 — XOR Breakthrough

Train a tiny network to master XOR in real time. What was once “impossible” for a single-layer perceptron becomes trivial with one hidden layer.
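A training run like this one can be sketched in plain Python. This is a minimal sketch, not the game's implementation: it assumes a 2-4-1 network of logistic (sigmoid) units, a sum-of-squares error, full-batch gradient descent, and hypothetical settings (4 hidden units, lr = 0.5, 5000 epochs, seed 0, weights drawn uniformly from [−1, 1]). `d_out` is the error signal at the output; `d_hid` is the share of blame backpropagated to each hidden unit.

```python
import math
import random

# XOR training set: ((x1, x2), target)
XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init(n_hidden=4, seed=0):
    """Small random weights for a 2-n_hidden-1 network (hypothetical defaults)."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(n_hidden)]
    b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = rng.uniform(-1, 1)
    return W1, b1, W2, b2


def forward(params, x):
    """Return hidden activations h and network output y for input x."""
    W1, b1, W2, b2 = params
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(W1, b1)]
    y = sigmoid(sum(v * hj for v, hj in zip(W2, h)) + b2)
    return h, y


def loss(params):
    """Sum-of-squares error E = 1/2 * sum (y - t)^2 over the four XOR cases."""
    return sum(0.5 * (forward(params, x)[1] - t) ** 2 for x, t in XOR)


def train(params, lr=0.5, epochs=5000):
    """Full-batch gradient descent; the gradients come from backpropagation."""
    W1, b1, W2, b2 = params
    n = len(W2)
    for _ in range(epochs):
        gW1 = [[0.0, 0.0] for _ in range(n)]
        gb1 = [0.0] * n
        gW2 = [0.0] * n
        gb2 = 0.0
        for x, t in XOR:
            h, y = forward((W1, b1, W2, b2), x)
            d_out = (y - t) * y * (1 - y)  # error signal at the output unit
            gb2 += d_out
            for j in range(n):
                gW2[j] += d_out * h[j]
                d_hid = d_out * W2[j] * h[j] * (1 - h[j])  # blame sent back to hidden unit j
                gW1[j][0] += d_hid * x[0]
                gW1[j][1] += d_hid * x[1]
                gb1[j] += d_hid
        # Step every parameter downhill along its accumulated gradient.
        for j in range(n):
            W2[j] -= lr * gW2[j]
            b1[j] -= lr * gb1[j]
            W1[j][0] -= lr * gW1[j][0]
            W1[j][1] -= lr * gW1[j][1]
        b2 -= lr * gb2
    return W1, b1, W2, b2


params = init()
before = loss(params)
params = train(params)
after = loss(params)
print(f"error before {before:.4f}, after {after:.4f}")
for x, t in XOR:
    print(x, "target", t, "output", round(forward(params, x)[1], 3))
```

Networks this small can occasionally stall in a local minimum, so if the outputs do not approach 0, 1, 1, 0, try another seed, more epochs, or more hidden units. Full-batch updates are one choice that keeps the run deterministic; updating after each example also works.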

Phase 4 — Hidden Representations

Probe the hidden layer. The model works—yet what it learned is hard to interpret. Welcome to modern AI.
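One concrete reason hidden units resist interpretation is that their labels carry no fixed meaning: swapping two hidden units, together with their incoming and outgoing weights, leaves the network's behavior unchanged. This minimal sketch probes a 2-2-1 sigmoid network built from hypothetical hand-picked weights (unit 0 roughly OR, unit 1 roughly NAND; not from a training run), then relabels the hidden units and probes again. The helpers `probe` and `permute_hidden` are illustrative names, not the game's "Probe" control.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def probe(W1, b1, W2, b2, x):
    """Return the hidden activations and the output for input x."""
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(W1, b1)]
    y = sigmoid(sum(v * hj for v, hj in zip(W2, h)) + b2)
    return h, y


def permute_hidden(W1, b1, W2, perm):
    """Relabel hidden units: old unit perm[i] becomes new unit i, weights and all."""
    return [W1[i] for i in perm], [b1[i] for i in perm], [W2[i] for i in perm]


# Hypothetical hand-picked weights for a 2-2-1 XOR network.
W1 = [[6.0, 6.0], [-6.0, -6.0]]  # unit 0 ~ OR, unit 1 ~ NAND
b1 = [-3.0, 9.0]
W2 = [8.0, 8.0]                  # output ~ AND of the two hidden units
b2 = -12.0

W1p, b1p, W2p = permute_hidden(W1, b1, W2, [1, 0])

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    h, y = probe(W1, b1, W2, b2, x)
    hp, yp = probe(W1p, b1p, W2p, b2, x)
    print(x, "hidden", [round(v, 3) for v in h], "relabeled", [round(v, 3) for v in hp],
          "output", round(y, 3), round(yp, 3))
```

Both versions compute XOR identically, yet "unit 0" means OR in one and NAND in the other. A trained network has no hand-chosen labels at all, and its units need not line up with any named logic gate, which is part of why its learned representation is hard to read.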

Controls

- Buttons to add/reset layers and start training
- Slider for learning rate
- Rotate view of the error surface
- “Probe” to reveal hidden activations

You’ll learn

- Why single-layer perceptrons fail at XOR, and how hidden layers fix it
- Gradient descent and backpropagation as credit assignment
- Error surfaces, learning rate, and convergence
- The tradeoff: powerful results, limited interpretability
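For readers who want the mechanics behind "credit assignment", these are the standard backpropagation equations for one training example, written in our own notation and assuming a squared-error loss with logistic units (σ′(z) = σ(z)(1 − σ(z))) and input a⁽⁰⁾ = x. Each δ⁽ˡ⁾ is the blame assigned to layer l, computed from the layer above it, and η is the learning rate.

```latex
\begin{aligned}
z^{(l)} &= W^{(l)} a^{(l-1)} + b^{(l)}, \qquad a^{(l)} = \sigma\!\left(z^{(l)}\right), \qquad E = \tfrac{1}{2}\,\lVert a^{(L)} - y \rVert^{2} \\
\delta^{(L)} &= \left(a^{(L)} - y\right) \odot \sigma'\!\left(z^{(L)}\right) \\
\delta^{(l)} &= \left( \left(W^{(l+1)}\right)^{\top} \delta^{(l+1)} \right) \odot \sigma'\!\left(z^{(l)}\right), \qquad l = L-1, \dots, 1 \\
\frac{\partial E}{\partial W^{(l)}} &= \delta^{(l)} \left(a^{(l-1)}\right)^{\top}, \qquad \frac{\partial E}{\partial b^{(l)}} = \delta^{(l)} \\
W^{(l)} &\leftarrow W^{(l)} - \eta\, \frac{\partial E}{\partial W^{(l)}}, \qquad b^{(l)} \leftarrow b^{(l)} - \eta\, \frac{\partial E}{\partial b^{(l)}}
\end{aligned}
```

The error is measured once at the output, and the same weights that carried activity forward carry the blame backward, so one backward pass yields the gradient for every weight.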

| Field | Value |
|---|---|
| Status | Released |
| Platforms | HTML5 |
| Author | MisterAtompunk |
| Genre | Educational |
